Modern Route: Full-Stack Generative AI and Agentic AI Bootcamp

Course Fee: ₹8,000.00 (inclusive of GST)

Course Overview

Duration: 6 Months

Start Date: March 8, 2026

Schedule: Saturday & Sunday, 8 PM to 11 PM

Full-Stack Generative AI Bootcamp

The Full-Stack Generative AI Bootcamp is a 5–6 month, industry-focused program for AI engineers, software developers, and tech professionals who want to move beyond theory and learn to build, deploy, and scale real-world GenAI systems.

This is not a “prompting-only” course. You will master the complete modern GenAI stack (LLMs, fine-tuning, RAG, agents, guardrails, evaluation, LLMOps, and cloud deployment) and learn to ship production-grade AI applications on platforms such as AWS and Azure.

By the end of the bootcamp, you won’t just understand Generative AI; you’ll be able to architect and deploy enterprise-ready systems, such as RAG-powered document portals and autonomous multi-agent report-generation pipelines, complete with safety controls, evaluations, monitoring, and scalable deployments.

What You Will Learn

Master the complete full-stack Generative AI lifecycle: from transformer foundations and model selection to fine-tuning, RAG systems, agent orchestration, guardrails, evaluation, and scalable cloud-native deployment on AWS/Azure.

LLM Foundations & Core Concepts

Understand what powers modern LLMs from the ground up. Learn the transformer architecture, how attention works, and why LLMs generalize across so many tasks. Build strong fundamentals in tokenization, text encoding, embeddings, vector similarity, and the evolution from classical NLP to modern contextual representations.
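Vector similarity is the operation that retrieval later builds on. As a minimal sketch, cosine similarity compares the direction of two embedding vectors; the 3-dimensional vectors below are made-up toy values (real embedding models produce hundreds or thousands of dimensions), and `cosine_similarity` is an illustrative helper, not a library function.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return dot(a, b) / (|a| * |b|): 1.0 for the same direction, 0.0 for orthogonal vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy 3-dimensional "embeddings" with made-up values (not output from a real model).
cat = [0.9, 0.1, 0.3]
kitten = [0.85, 0.15, 0.35]
invoice = [0.05, 0.95, 0.1]

print(round(cosine_similarity(cat, kitten), 3))   # close to 1: similar direction
print(round(cosine_similarity(cat, invoice), 3))  # much lower: unrelated
```

Because cosine similarity ignores vector length, many vector databases normalize embeddings once and then use a plain dot product, which gives the same ranking.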

LLM Ecosystem & Model Selection

Navigate the landscape of modern models: LLMs vs. SLMs (small language models) vs. multimodal models. Compare major model families such as GPT, Gemini, Claude, LLaMA, Mistral, and Qwen, plus efficient SLMs such as Phi and Gemma. Learn a practical model-selection framework based on task type, latency, cost, deployment feasibility, and modality (text, code, vision).

LLM APIs, Streaming & Provider Abstraction

Work hands-on with commercial API ecosystems such as OpenAI, Anthropic, Gemini, Groq, and OpenRouter. Learn how to structure production calls (system/user prompts; parameters such as temperature and max tokens), stream responses, handle retries, and track token usage. Build abstraction layers so you can switch providers without rewriting your application logic.
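A provider abstraction can be sketched as one small interface that application code depends on, with retries and token accounting layered on top. This is a minimal, dependency-free sketch under stated assumptions: `ChatProvider`, `FakeProvider`, `TransientError`, and `complete_with_retries` are hypothetical names, the fake provider stands in for a real vendor SDK adapter, and whitespace word counts stand in for real token counts.

```python
import random
import time
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass
class ChatResult:
    text: str
    input_tokens: int
    output_tokens: int


class TransientError(Exception):
    """Hypothetical error for retryable failures such as rate limits or timeouts."""


class ChatProvider(Protocol):
    """The only interface application code depends on; each vendor gets an adapter."""

    def complete(self, system: str, user: str, *, temperature: float, max_tokens: int) -> ChatResult: ...


class FakeProvider:
    """Stand-in adapter: fails a fixed number of times, then echoes the user prompt."""

    def __init__(self, failures_before_success: int = 0) -> None:
        self.remaining_failures = failures_before_success

    def complete(self, system: str, user: str, *, temperature: float, max_tokens: int) -> ChatResult:
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise TransientError("simulated rate limit")
        words = user.split()[:max_tokens]
        # Whitespace-separated words stand in for real tokenizer counts.
        return ChatResult(" ".join(words), len(system.split()) + len(user.split()), len(words))


@dataclass
class UsageTracker:
    input_tokens: int = 0
    output_tokens: int = 0

    def record(self, result: ChatResult) -> None:
        self.input_tokens += result.input_tokens
        self.output_tokens += result.output_tokens


def complete_with_retries(
    provider: ChatProvider,
    system: str,
    user: str,
    usage: UsageTracker,
    *,
    temperature: float = 0.2,
    max_tokens: int = 256,
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> ChatResult:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            result = provider.complete(system, user, temperature=temperature, max_tokens=max_tokens)
        except TransientError:
            if attempt == max_attempts:
                raise  # out of retries: surface the error to the caller
            # Exponential backoff (0.5 s, 1 s, 2 s, ...) plus up to 10% random jitter.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
            continue
        usage.record(result)
        return result
    raise AssertionError("unreachable")


usage = UsageTracker()
provider = FakeProvider(failures_before_success=2)
# sleep is replaced with a no-op so the demo does not actually wait.
result = complete_with_retries(provider, "You are terse.", "Summarize retries in one line", usage, sleep=lambda s: None)
print(result.text, usage.input_tokens, usage.output_tokens)
```

Switching vendors then means writing another class with the same `complete` method; the retry and usage logic stays untouched. Injecting `sleep` is a design choice that keeps the backoff testable.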

Fine-Tuning (LoRA / QLoRA / PEFT)

Go beyond prompt engineering and learn to adapt models to your own domain. Compare full fine-tuning with parameter-efficient fine-tuning (PEFT), including LoRA and QLoRA. Learn dataset preparation (instruction formatting, splits, cleaning) and modern tooling such as Hugging Face Transformers + PEFT, Unsloth, and Axolotl, and see where advanced alignment methods such as RLHF, DPO, ORPO, and GRPO fit and how they are applied.
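For intuition on what “parameter-efficient” means: LoRA freezes a pretrained weight matrix $W_0 \in \mathbb{R}^{d \times k}$ and trains only a low-rank update $BA$, scaled by $\alpha / r$. The layer sizes below ($d = k = 4096$, rank $r = 8$) are illustrative assumptions, not figures from any specific model:

$$
h = W_0 x + \frac{\alpha}{r} B A x, \qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$

$$
\underbrace{r(d + k)}_{\text{LoRA}} = 8 \times 8192 = 65{,}536
\qquad \text{vs.} \qquad
\underbrace{dk}_{\text{full}} = 4096^2 = 16{,}777{,}216
$$

So for this layer, LoRA trains $65{,}536 / 16{,}777{,}216 = 1/256 \approx 0.39\%$ of the parameters that full fine-tuning would update. QLoRA keeps the same adapter idea but also stores the frozen base weights in 4-bit precision to cut memory further.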

Production RAG Systems (Vector DB + Re-Ranking)

Build complete Retrieval-Augmented Generation (RAG) pipelines that reduce hallucinations and deliver grounded answers. Learn ingestion and parsing (PDFs, docs, web pages), chunking strategies, embedding selection, metadata filtering, and vector DB integration with Pinecone, Qdrant, or Chroma. Implement robust retrieval workflows, including similarity search, maximal marginal relevance (MMR), and cross-encoder re-ranking, plus citation-aware prompting for trustworthy outputs.
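MMR trades relevance against redundancy: at each step it picks the document that maximizes λ·sim(query, doc) minus (1 − λ)·(its highest similarity to any already-selected document). A minimal pure-Python sketch with toy 2-D vectors; `mmr` and `lambda_mult` (our name for λ) are illustrative, and a vector DB or framework would apply the same idea to real embeddings.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mmr(query: list[float], docs: list[list[float]], k: int = 3, lambda_mult: float = 0.5) -> list[int]:
    """Greedily pick k document indices by maximal marginal relevance.

    score(d) = lambda_mult * sim(query, d) - (1 - lambda_mult) * max(sim(d, s) for s already selected)
    """
    relevance = [cosine(query, d) for d in docs]
    selected: list[int] = []
    candidates = list(range(len(docs)))
    while candidates and len(selected) < k:
        best, best_score = None, -math.inf
        for i in candidates:
            redundancy = max((cosine(docs[i], docs[j]) for j in selected), default=0.0)
            score = lambda_mult * relevance[i] - (1 - lambda_mult) * redundancy
            if score > best_score:  # strict: ties keep the earlier candidate
                best, best_score = i, score
        selected.append(best)
        candidates.remove(best)
    return selected


query = [1.0, 1.0]
docs = [
    [1.0, 0.2],   # relevant
    [1.0, 0.21],  # near-duplicate of doc 0, marginally more relevant
    [0.2, 1.0],   # equally relevant as doc 0, but different content
]
print(mmr(query, docs, k=2, lambda_mult=1.0))  # -> [1, 0]: pure relevance returns the near-duplicates
print(mmr(query, docs, k=2, lambda_mult=0.5))  # -> [1, 2]: the diversity penalty swaps in doc 2
```

With `lambda_mult=1.0` MMR reduces to plain similarity search; lowering it increases diversity in the retrieved context.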

Advanced RAG & Multimodal Systems

Take RAG from demo to production. Learn context engineering, context-window optimization, caching strategies (response cache, embedding cache, cache-augmented generation or CAG), and reliability evaluation (faithfulness, relevance, retrieval quality). Extend systems to multimodal RAG (grounding answers in text and images with vision-language workflows), and learn to debug common RAG failure modes such as bad chunking, noisy retrieval, missing context, and overlong prompts.
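A response cache only works if its key captures everything that can change the output. This minimal sketch, under stated assumptions, uses an in-memory dict with a time-to-live where a production system might use a shared store such as Redis; `ResponseCache`, `fake_generate`, and the model name `"model-a"` are hypothetical stand-ins.

```python
import hashlib
import json
import time
from typing import Callable


class ResponseCache:
    """In-memory LLM response cache with a time-to-live (TTL).

    A dict stands in for a shared store such as Redis.
    """

    def __init__(self, ttl_seconds: float = 3600.0, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}

    @staticmethod
    def make_key(model: str, prompt: str, **params: object) -> str:
        # Every input that can change the output must be part of the key;
        # sort_keys makes the key independent of keyword order.
        payload = json.dumps({"model": model, "prompt": prompt, "params": params}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_compute(self, model: str, prompt: str, generate: Callable[[str], str], **params: object) -> str:
        key = self.make_key(model, prompt, **params)
        now = self.clock()
        cached = self._store.get(key)
        if cached is not None and now - cached[0] < self.ttl_seconds:
            return cached[1]  # cache hit: no model call
        value = generate(prompt)  # cache miss: call the (hypothetical) model
        self._store[key] = (now, value)
        return value


calls: list[str] = []


def fake_generate(prompt: str) -> str:
    calls.append(prompt)
    return prompt.upper()


cache = ResponseCache()
cache.get_or_compute("model-a", "hello", fake_generate, temperature=0.0)
cache.get_or_compute("model-a", "hello", fake_generate, temperature=0.0)  # hit
cache.get_or_compute("model-a", "hello", fake_generate, temperature=0.7)  # different params: miss
print(len(calls))
```

Exact-match caching like this is usually safest for deterministic settings (for example, temperature 0); an embedding cache follows the same pattern with the chunk text as the key.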

Agentic AI + LangGraph Orchestration

Design autonomous AI systems that do more than chat. Learn to build single-agent and multi-agent architectures, including supervisor, hierarchical, and network-based designs. Implement tool use (APIs, functions, search, RAG), memory and state management, role prompts (planner/executor), human-in-the-loop approvals, loop prevention, and cost-aware execution budgets. Learn orchestration layers and state graphs through LangGraph, with conceptual coverage of CrewAI and AutoGen.
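Loop prevention and execution budgeting can be illustrated without any framework. This is a minimal sketch, not LangGraph’s API: a hypothetical `plan_next` function (standing in for an LLM planner) proposes `(action, argument, estimated_cost)` steps, and `run_agent` stops on a finish action, an unknown tool, a repeated identical tool call, or an exhausted step/cost budget.

```python
from dataclasses import dataclass
from typing import Callable

# (action, argument, estimated_cost_usd) proposed by a planner for the next step.
Step = tuple[str, str, float]
History = list[tuple[str, str, str]]


@dataclass
class Budget:
    max_steps: int = 8
    max_cost_usd: float = 0.05
    steps: int = 0
    cost_usd: float = 0.0

    def can_afford(self, cost_usd: float) -> bool:
        return self.steps < self.max_steps and self.cost_usd + cost_usd <= self.max_cost_usd

    def charge(self, cost_usd: float) -> None:
        self.steps += 1
        self.cost_usd += cost_usd


def run_agent(
    plan_next: Callable[[History], Step],
    tools: dict[str, Callable[[str], str]],
    budget: Budget,
) -> tuple[str, History]:
    history: History = []
    seen: set[tuple[str, str]] = set()
    while True:
        action, arg, cost = plan_next(history)
        # Check the budget before executing, so no step overspends it.
        if not budget.can_afford(cost):
            return "stopped: budget exhausted", history
        budget.charge(cost)
        if action == "finish":
            return arg, history
        if action not in tools:
            return f"stopped: unknown tool {action!r}", history
        # Loop prevention: an identical repeated tool call usually means the agent is stuck.
        if (action, arg) in seen:
            return "stopped: repeated tool call", history
        seen.add((action, arg))
        history.append((action, arg, tools[action](arg)))


def planner(history: History) -> Step:
    """Hypothetical planner: search once, then finish using the last result."""
    if not history:
        return ("search", "LangGraph checkpoints", 0.01)
    return ("finish", f"Summary of: {history[-1][2]}", 0.01)


tools = {"search": lambda q: f"results for {q}"}
answer, trace = run_agent(planner, tools, Budget())
print(answer)
```

In a real system the stop reasons would typically be surfaced to a human-in-the-loop checkpoint rather than silently returned.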

Guardrails, Evaluation & Cloud Deployment

Ship production-safe GenAI applications with built-in quality control. Learn observability (logging and tracing prompts, context, and tool calls) and evaluation strategies such as LLM-as-a-judge, offline vs. online evaluation, and system-level metrics that balance latency, cost, and UX. Implement guardrails for input/output validation, schema enforcement (Pydantic), refusal logic, and prompt-injection defense, using safety frameworks such as Guardrails.ai and OpenAI Guardrails. Finally, containerize and deploy end-to-end systems using AWS ECS/Fargate, SageMaker endpoints, and API Gateway/ALB, with CI/CD and monitoring.
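Schema enforcement means rejecting model output that does not match an expected structure before it reaches users. The course names Pydantic for this; to keep the sketch dependency-free, it swaps in the standard library’s `json` and `dataclasses` instead. The schema (`GroundedAnswer`, `Citation`) and `parse_grounded_answer` are hypothetical examples of a citation-backed answer format.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    doc_id: str
    page: int


@dataclass(frozen=True)
class GroundedAnswer:
    answer: str
    citations: tuple[Citation, ...]


class SchemaError(ValueError):
    """Raised when model output does not match the expected schema."""


def parse_grounded_answer(raw: str) -> GroundedAnswer:
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise SchemaError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise SchemaError("top-level value must be a JSON object")
    answer = data.get("answer")
    if not isinstance(answer, str) or not answer.strip():
        raise SchemaError("'answer' must be a non-empty string")
    items = data.get("citations")
    # Requiring at least one citation enforces grounded, citation-backed answers.
    if not isinstance(items, list) or not items:
        raise SchemaError("'citations' must be a non-empty list")
    citations = []
    for item in items:
        # type(...) is int rejects booleans, which are a subclass of int.
        if not (isinstance(item, dict) and isinstance(item.get("doc_id"), str) and type(item.get("page")) is int):
            raise SchemaError(f"malformed citation: {item!r}")
        citations.append(Citation(item["doc_id"], item["page"]))
    return GroundedAnswer(answer, tuple(citations))


ok = parse_grounded_answer('{"answer": "Refunds take 5 days.", "citations": [{"doc_id": "policy.pdf", "page": 3}]}')
print(ok.citations[0].doc_id)
try:
    parse_grounded_answer('{"answer": "Trust me."}')
except SchemaError as err:
    print("rejected:", err)  # a caller might re-prompt the model or return a refusal here
```

With Pydantic, the same contract would typically be a `BaseModel` whose validation errors trigger the same re-prompt or refusal path.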

Projects You'll Build

In this bootcamp, you’ll gain real production experience by building end-to-end GenAI applications. You’ll ship systems that cover fine-tuning, RAG, multi-agent orchestration, guardrails, evaluation, and cloud deployment.

Project 1: Intelligent Document Portal (End-to-End RAG Deployment)

Build a production-grade Document Intelligence Portal that ingests large document collections and answers questions with grounded, citation-backed outputs. You’ll implement the full RAG pipeline (upload → parse → chunk → embed → index → retrieve → generate) with advanced features such as query rewriting, MMR retrieval, re-ranking, multi-document chat, and document comparison. You’ll also ship it with Redis caching (CAG), evaluation and guardrails, and full deployment on AWS ECS/Fargate with CI/CD and observability.
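The chunk step of that pipeline often uses overlapping windows, so a passage cut at one boundary still appears intact in the neighboring chunk. A minimal sketch, assuming whitespace-split words as a stand-in for model tokens; `chunk_text` and its default sizes are hypothetical, not the project’s actual settings.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `chunk_size` words; consecutive windows share `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_text(sample, chunk_size=4, overlap=1):
    print(chunk)
```

Larger overlap improves recall at boundaries but stores more near-duplicate text; structure-aware splitting (by heading or paragraph) is a common refinement.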

Project 2: Autonomous Report Generation System (Multi-Agent Deployment)

Build a complete multi-agent AI system that researches, analyzes, and generates structured reports like a real team of AI analysts. You’ll design multiple agents (Search, Reader, Analyst, Generator, Coordinator) and orchestrate them with LangGraph, CrewAI, or AutoGen-style state workflows, using shared memory, tool calling, and RAG grounding for citation-aware results. The final system includes human-in-the-loop checkpoints, safe termination logic, and a deployable backend architecture with a FastAPI dispatcher, a report preview UI, and complete traces and logs for monitoring.

Course Curriculum

Foundations of Modern GenAI

1. Introduction to Modern Generative AI & Large Language Models (LLMs): What GenAI is and how LLMs work at a high level

2. Transformer Architecture (Core Concept): Why transformers are the backbone of modern LLMs

3. Text Encoding & Tokenization: Why text must be encoded, tokenization basics, vocabulary creation, subword tokens

4. Evolution of Text Representations: Classical encoding techniques and the shift to word embeddings

5. Embeddings, Vector Space & Similarity: Word, contextual, and sentence embeddings, vector space representation, similarity measures
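Subword vocabulary creation can be made concrete with byte-pair encoding (BPE), one common approach (WordPiece and Unigram are others). This minimal training sketch uses a tiny toy word-frequency table and repeatedly merges the most frequent adjacent symbol pair; the function names are illustrative, and real tokenizers add details omitted here, such as byte-level alphabets and pre-tokenization rules.

```python
from collections import Counter
from typing import Optional

Word = tuple[str, ...]  # a word as a sequence of current symbols


def most_frequent_pair(corpus: dict[Word, int]) -> Optional[tuple[str, str]]:
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs: Counter = Counter()
    for word, freq in corpus.items():
        for a, b in zip(word, word[1:]):
            pairs[(a, b)] += freq
    return max(pairs, key=pairs.__getitem__) if pairs else None


def merge_pair(corpus: dict[Word, int], pair: tuple[str, str]) -> dict[Word, int]:
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged: dict[Word, int] = {}
    for word, freq in corpus.items():
        out: list[str] = []
        i = 0
        while i < len(word):
            if i + 1 < len(word) and (word[i], word[i + 1]) == pair:
                out.append(word[i] + word[i + 1])
                i += 2
            else:
                out.append(word[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def train_bpe(word_counts: dict[str, int], num_merges: int) -> tuple[list[tuple[str, str]], dict[Word, int]]:
    corpus = {tuple(word): count for word, count in word_counts.items()}  # start from characters
    merges: list[tuple[str, str]] = []
    for _ in range(num_merges):
        pair = most_frequent_pair(corpus)
        if pair is None:
            break  # every word is already a single symbol
        merges.append(pair)
        corpus = merge_pair(corpus, pair)
    return merges, corpus


merges, corpus = train_bpe({"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}, num_merges=3)
print(merges)
print(corpus)
```

The learned merge list is the vocabulary-building step: at inference time, new text is split into characters and the same merges are replayed in order, so unseen words still decompose into known subwords.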


Why Learn This Course

Go from LLM Basics to Production Engineer

Whether you’re new to LLMs or already experimenting, this bootcamp provides a structured path: from transformer foundations and embeddings to complete GenAI applications with real deployment workflows on AWS/Azure.

Master the Full Generative AI Stack

This course goes beyond prompting. You’ll master the entire modern GenAI stack, giving you end-to-end capability: LLM APIs, fine-tuning (LoRA/QLoRA), RAG systems, agents, evaluation, guardrails, MCP (Model Context Protocol), and cloud deployment.

Build an Enterprise-Ready Portfolio

You’ll graduate with two end-to-end deployment projects: an Intelligent Document Portal (RAG) and an Autonomous Report Generation System (multi-agent). These are real portfolio projects that demonstrate production skills beyond basic demos.

Learn Production-Ready Deployment & LLMOps

You’ll learn how real GenAI systems are shipped: Dockerized APIs, scalable infrastructure, model endpoints, stateless services, caching, CI/CD pipelines, and observability, using AWS services such as SageMaker, ECS/Fargate, S3, and RDS, along with their Azure equivalents.

Learn the Future of AI: Agents & Orchestration

The industry is moving from chatbots to autonomous systems. You’ll learn agent architectures (single-agent, multi-agent, and deep agents), including tool calling, memory and state management, LangGraph-style orchestration, and human-in-the-loop workflows for safe autonomy.

Build Safe, Reliable AI (Evaluation + Guardrails)

Many GenAI apps fail in production because of hallucinations and unsafe outputs. This course teaches you to build trustworthy systems using guardrails (prompt-injection defense, I/O validation, schema enforcement) and evaluation methods such as LLM-as-a-judge and RAG evaluation (faithfulness, relevance), along with system-level cost and latency optimization.

Skills You Will Acquire

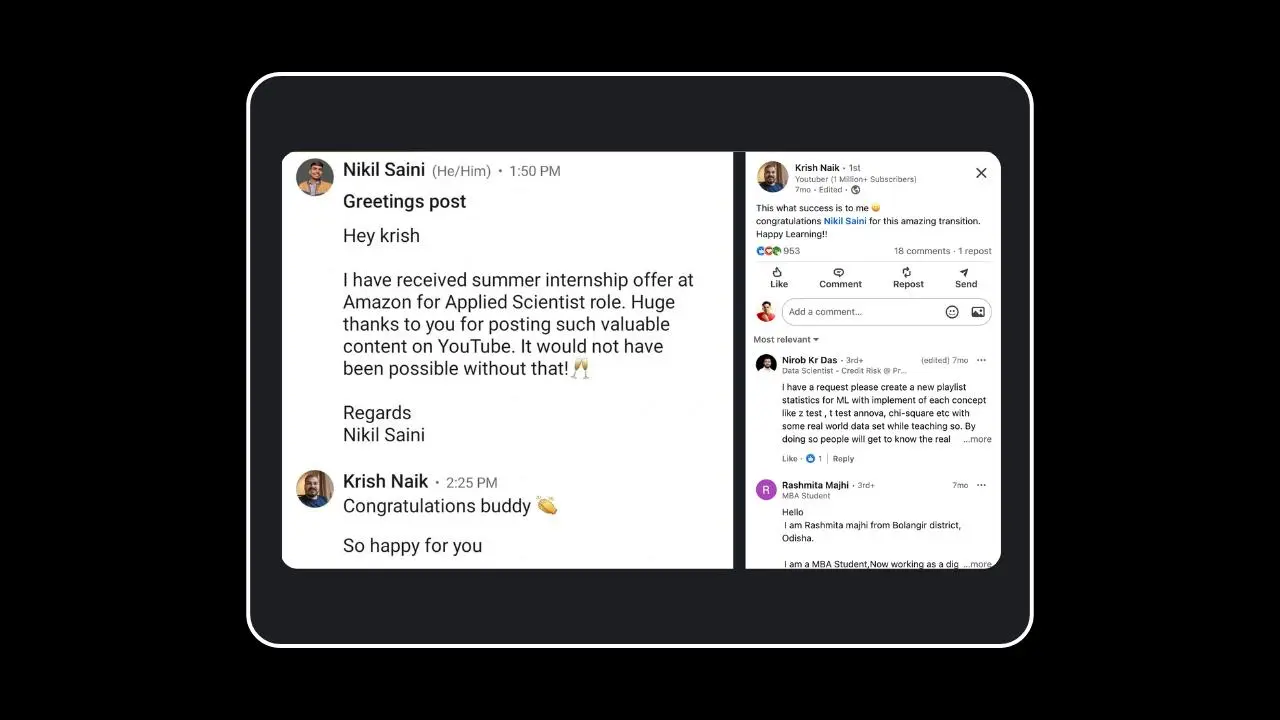

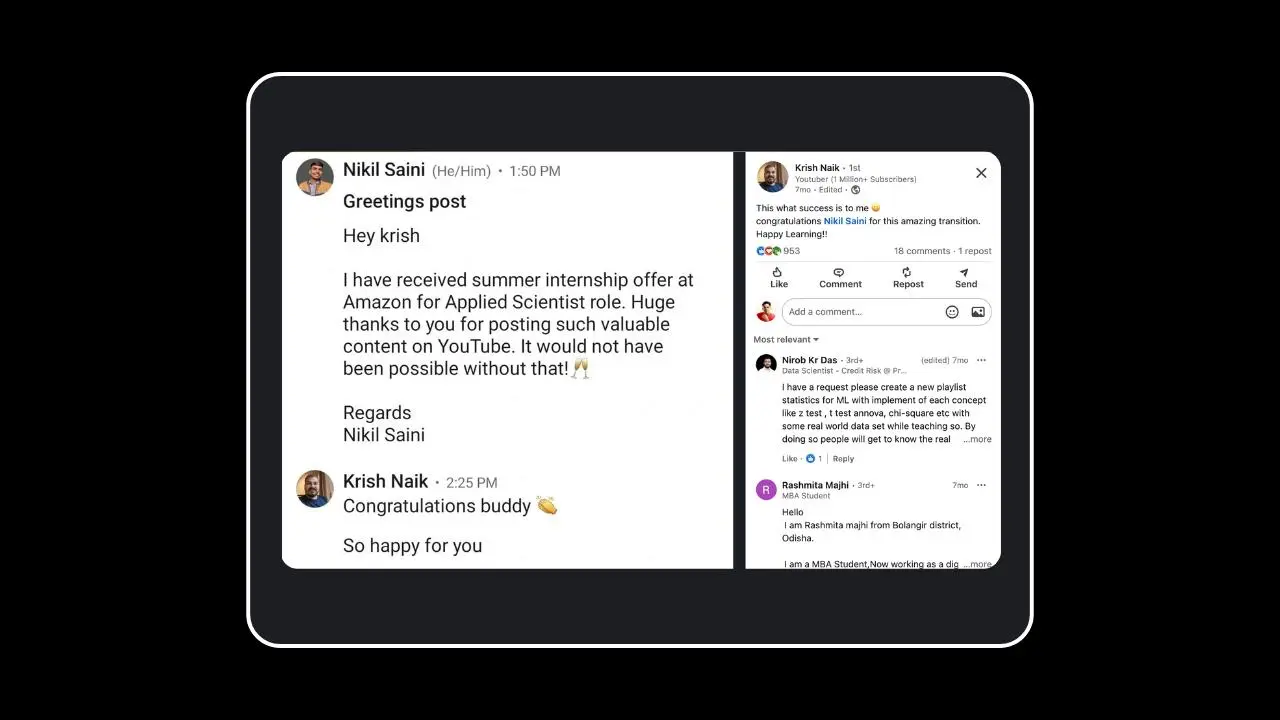

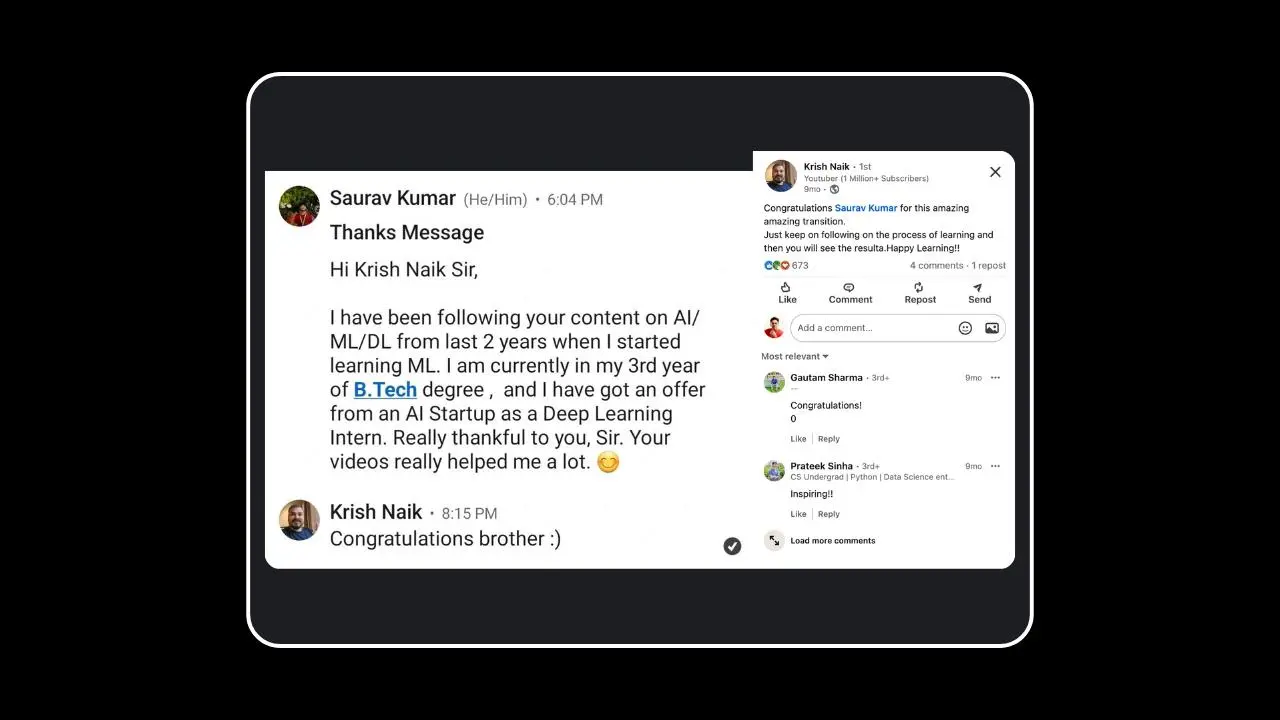

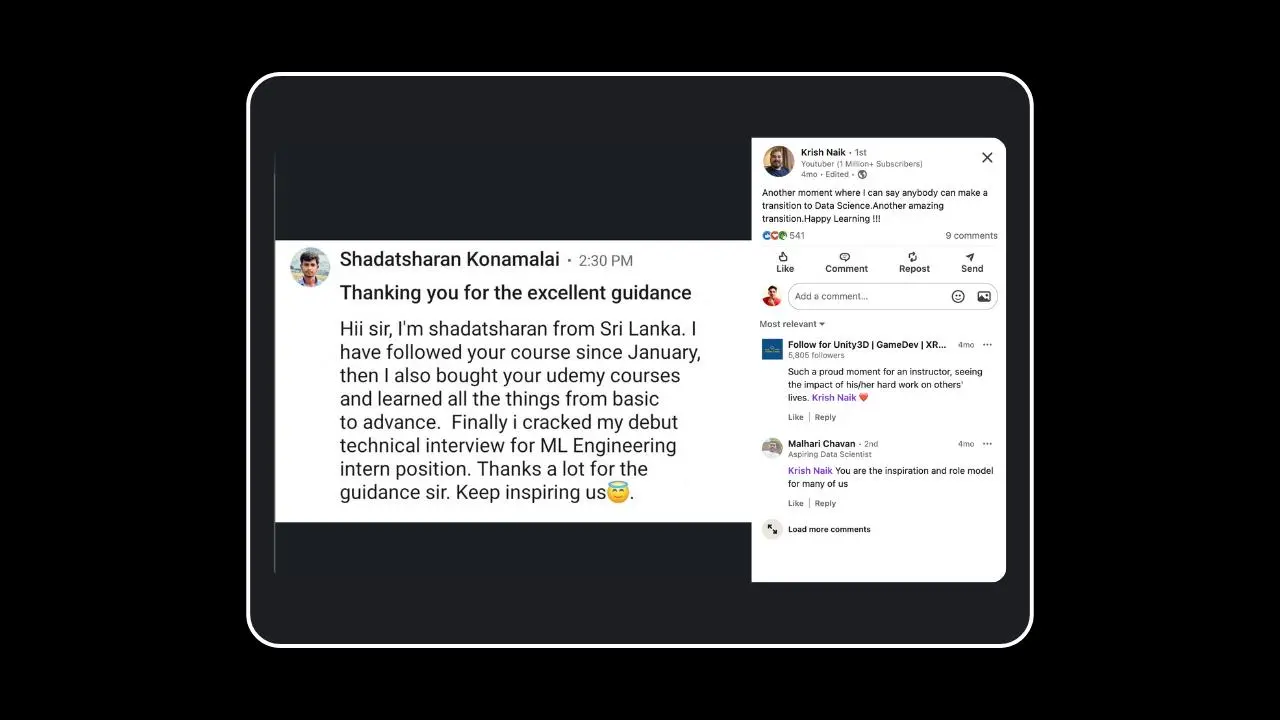

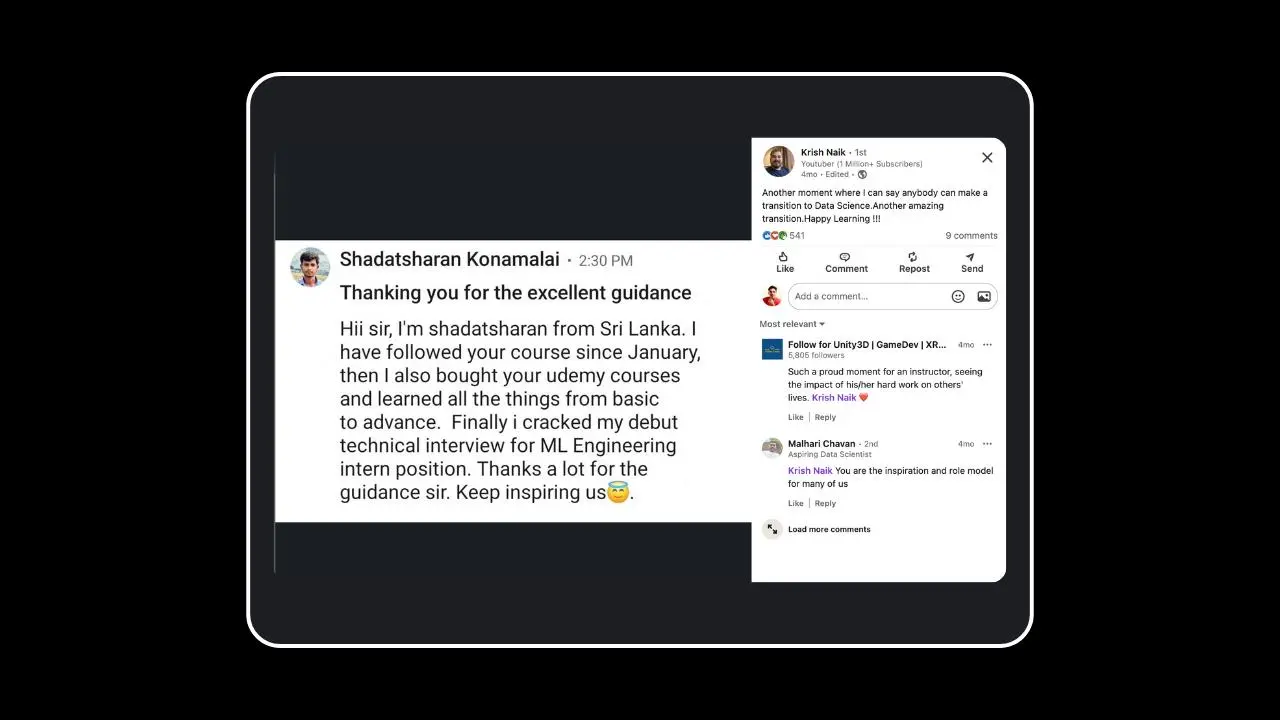

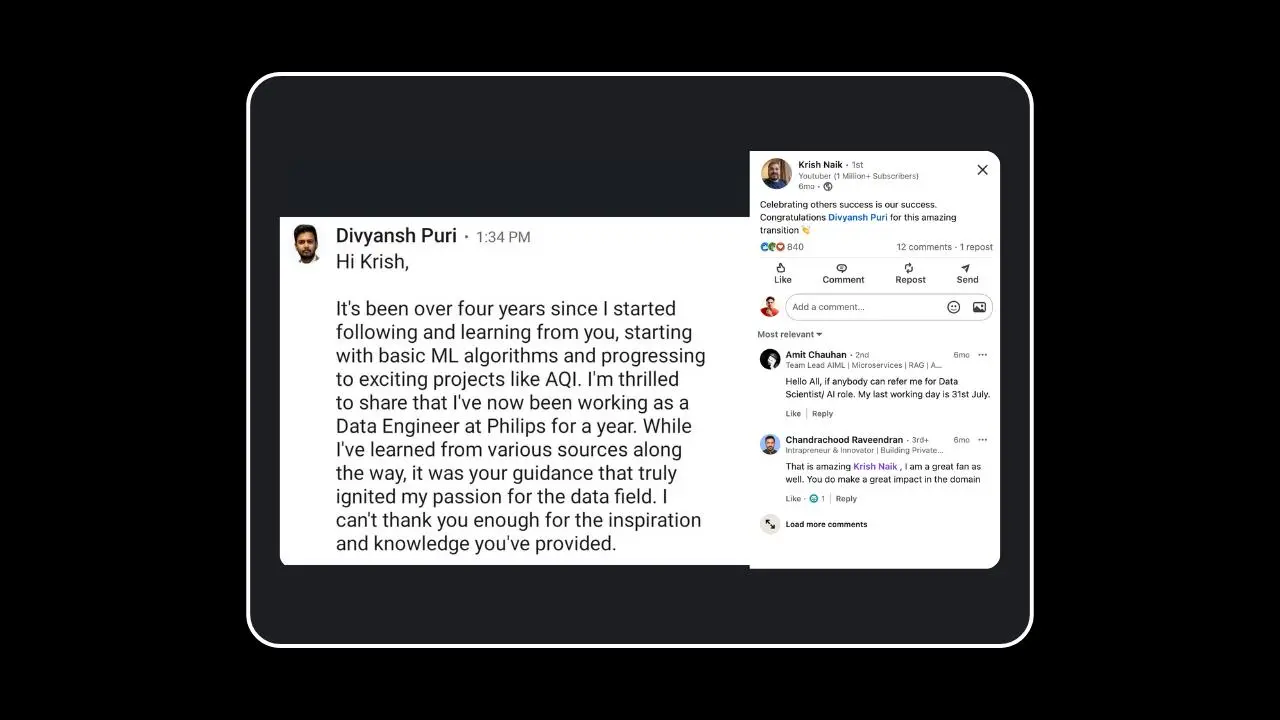

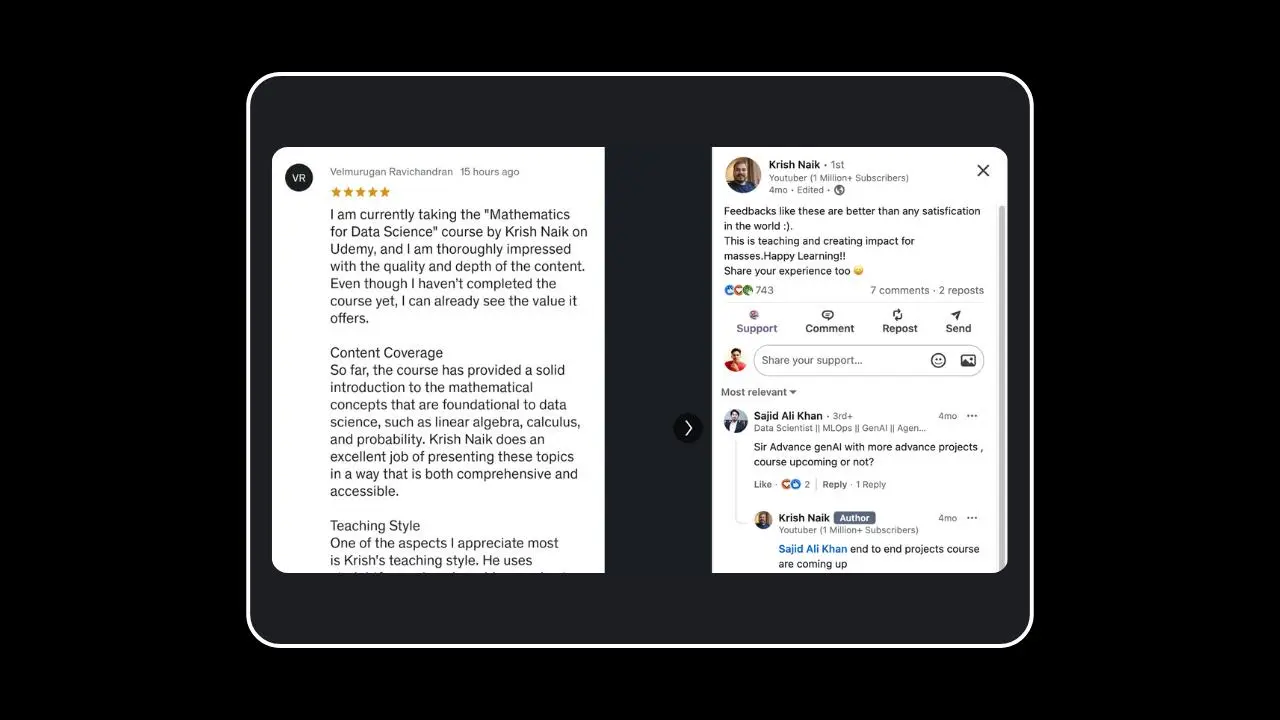

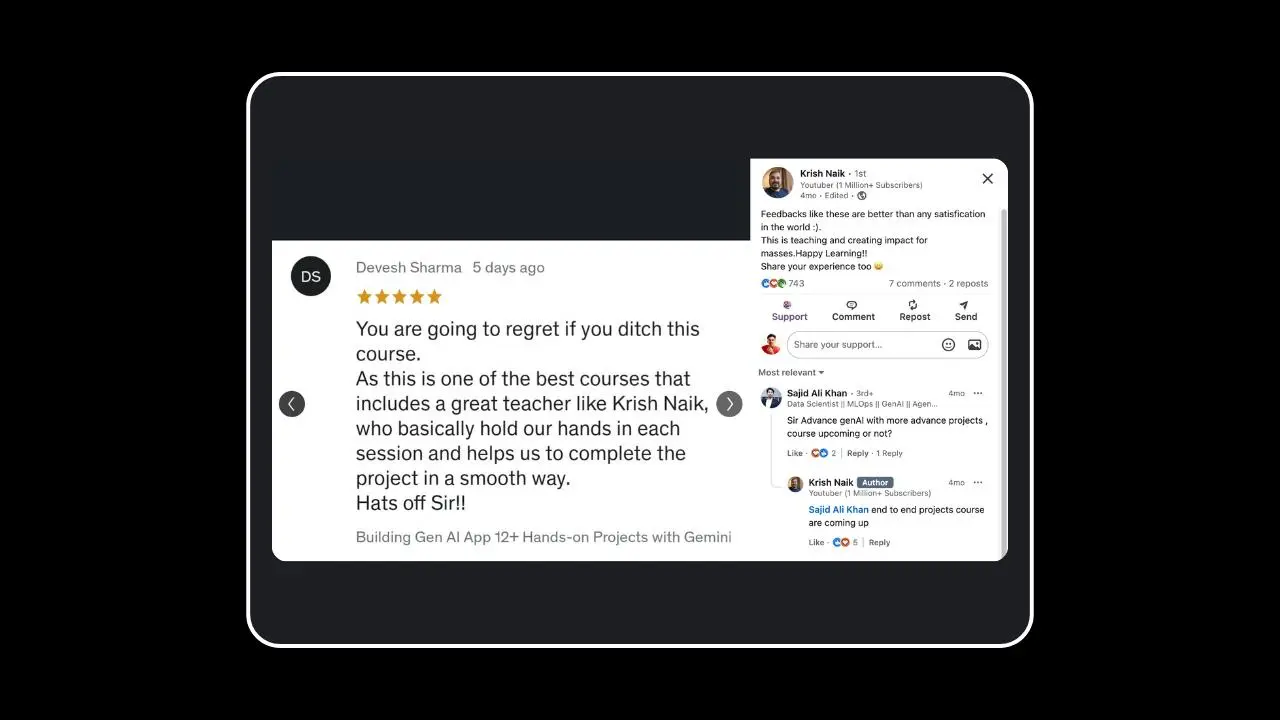

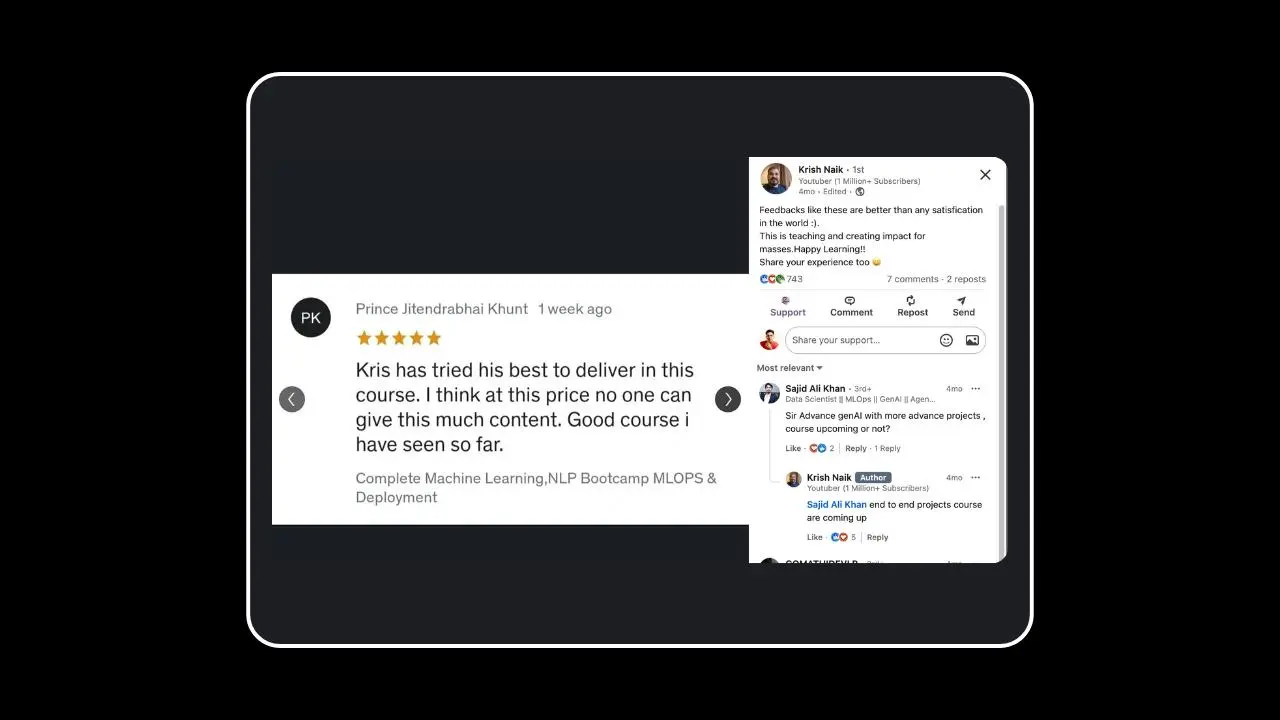

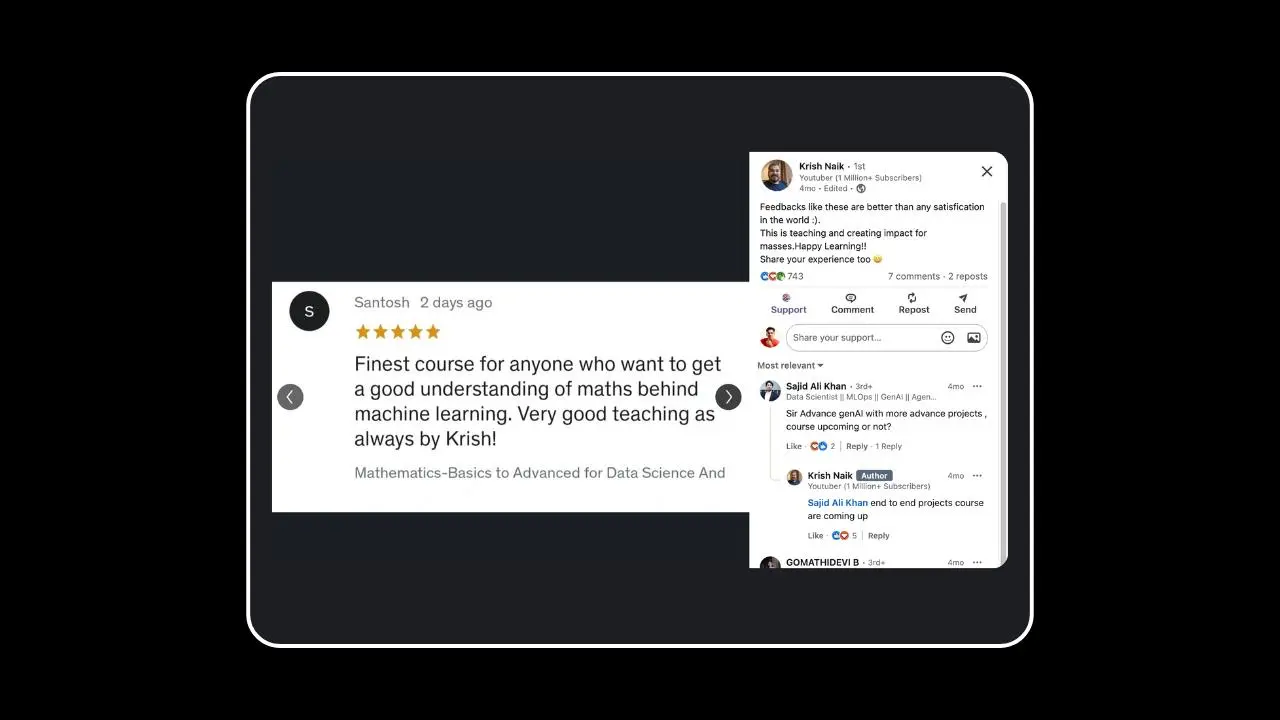

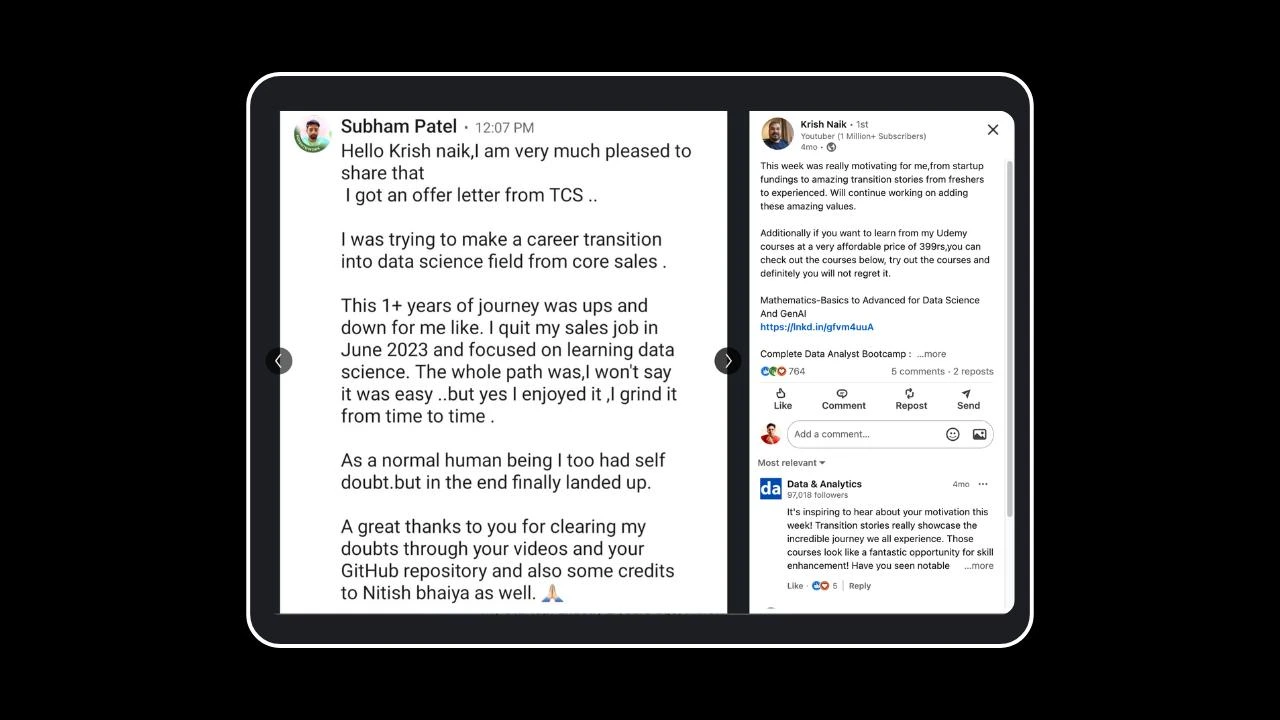

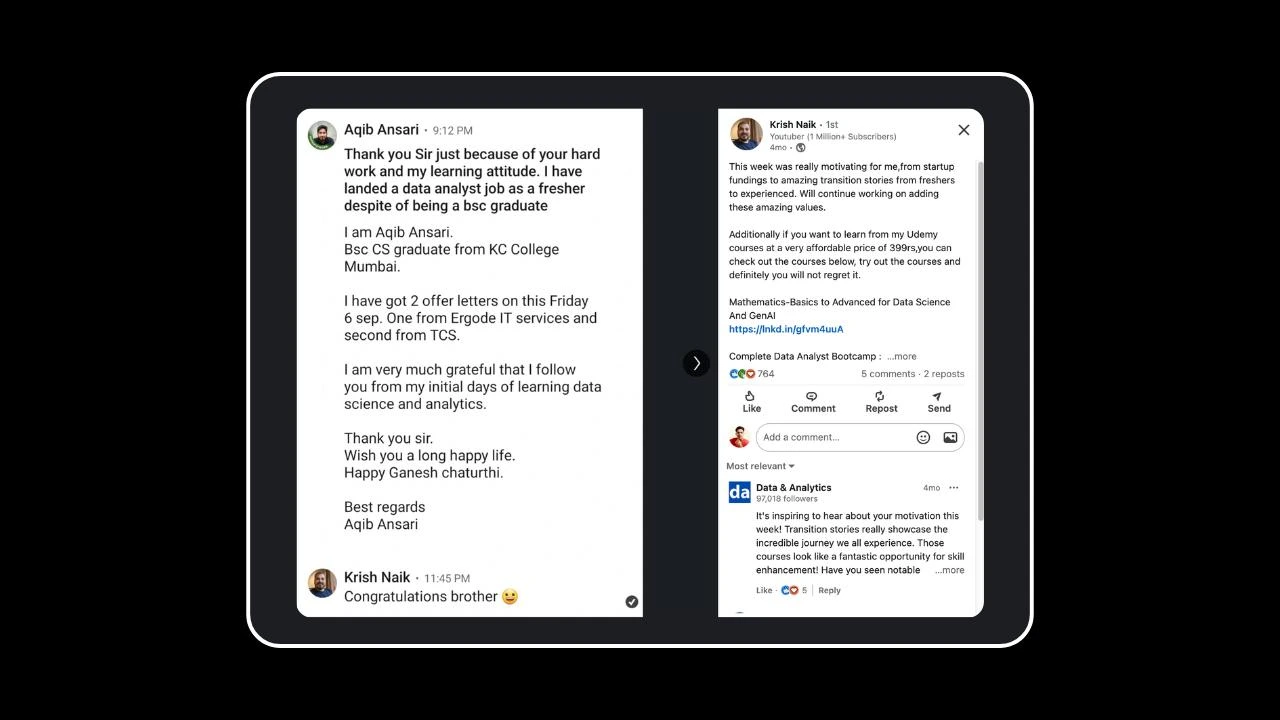

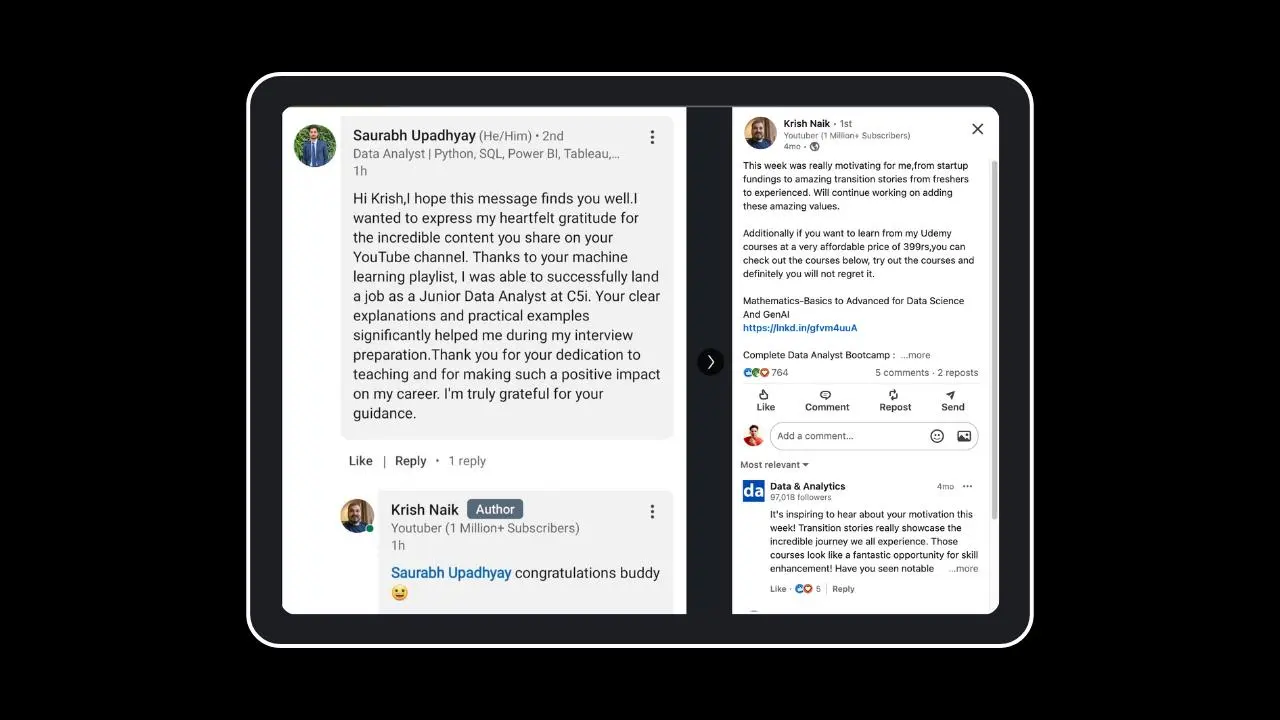

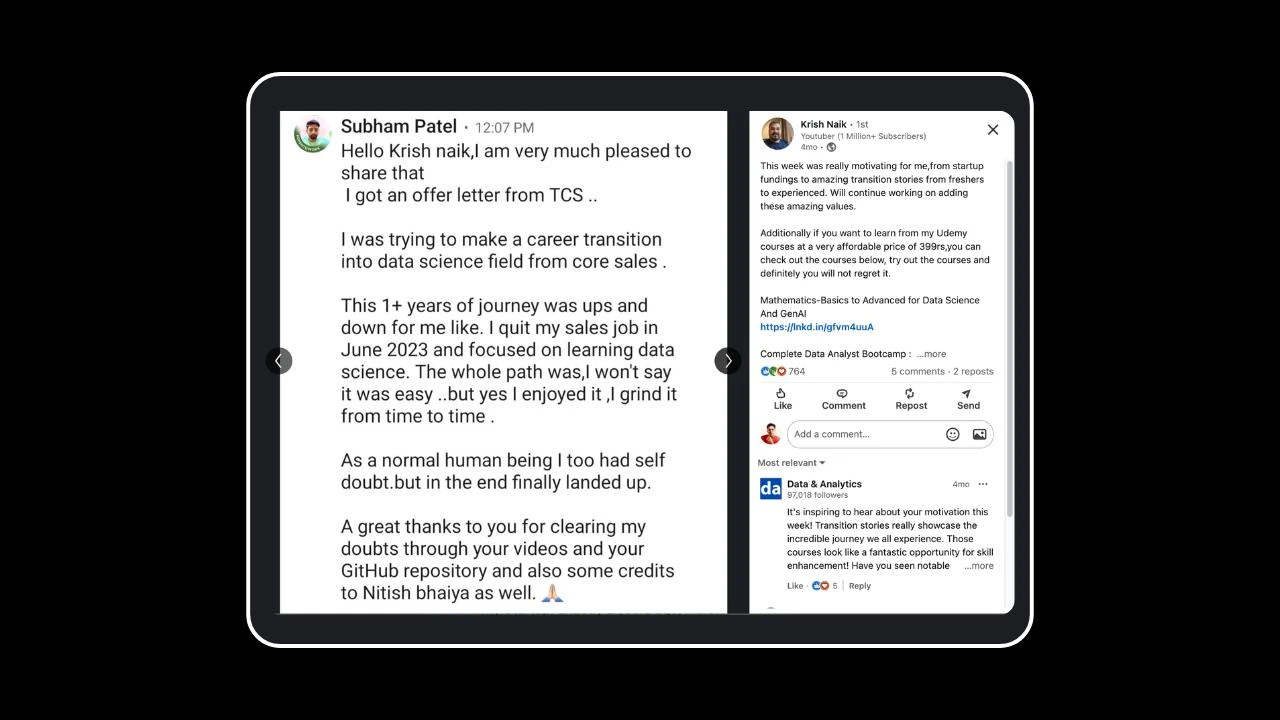

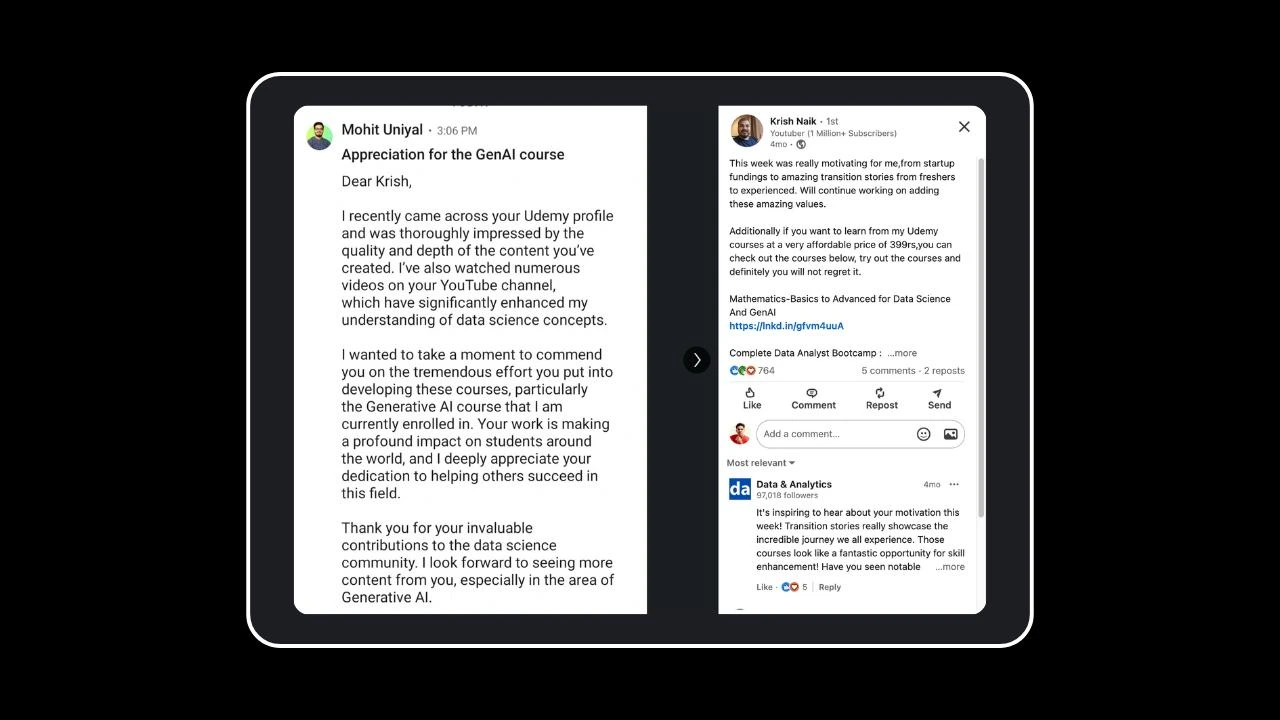

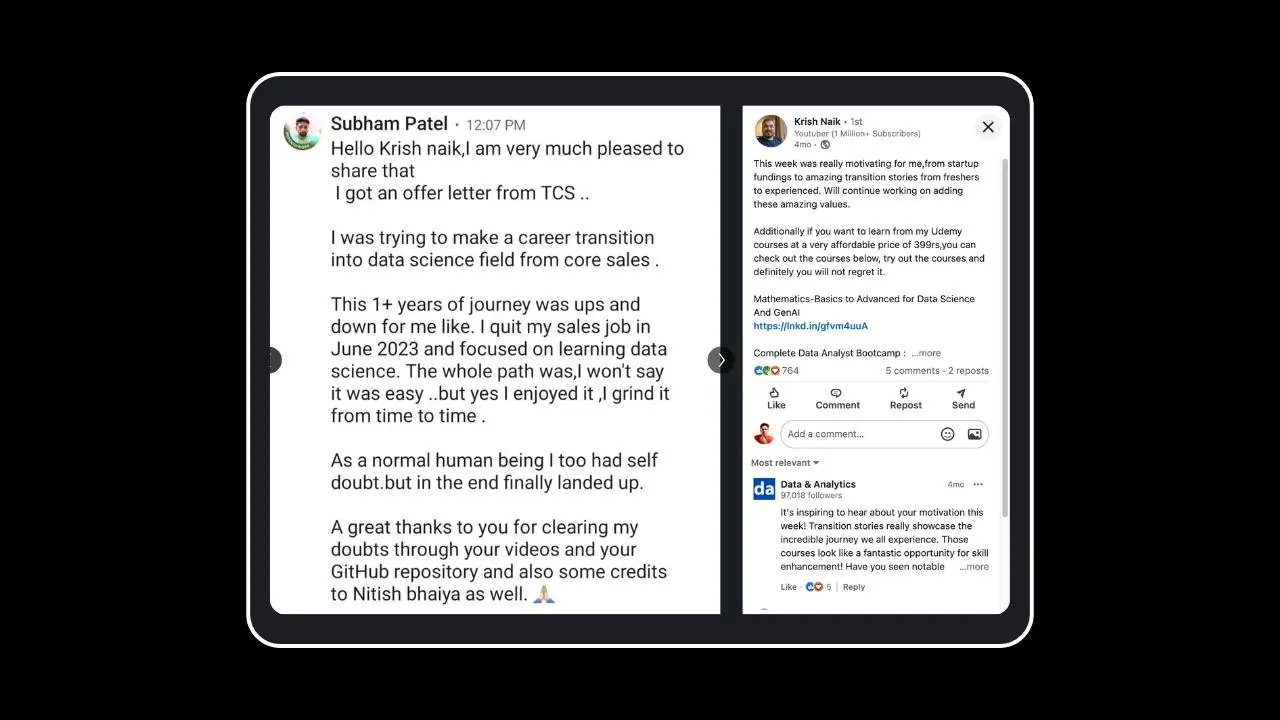


Ready to Transform Your Career?

Join thousands of students mastering AI and Data Science with expert-led courses.
